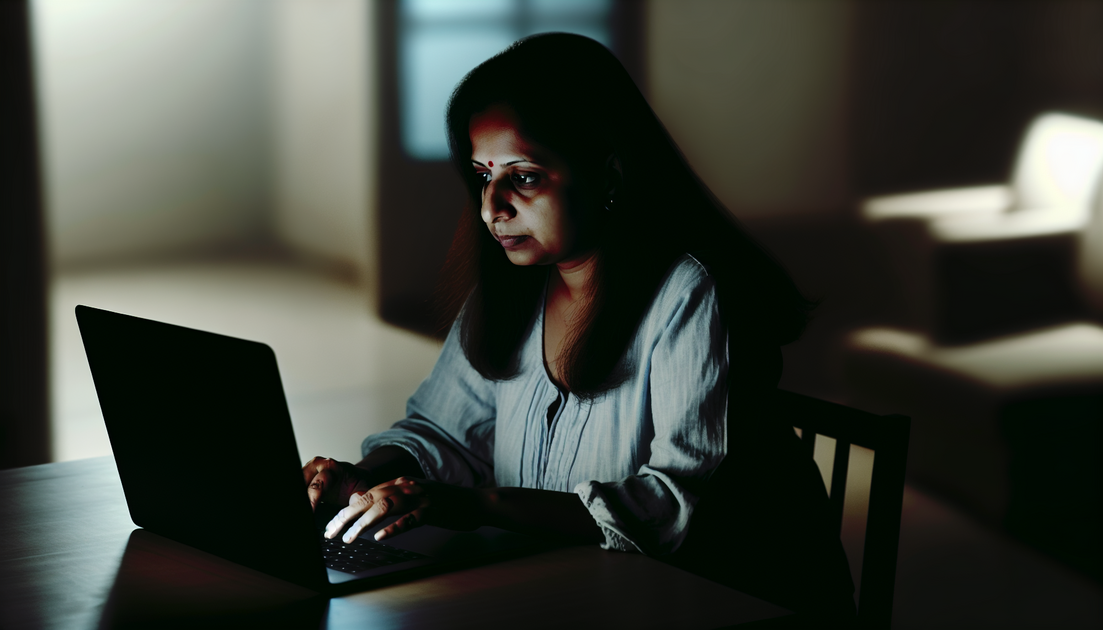

It’s late at night, and the room is quiet except for the faint hum of a laptop fan. Someone opens a chat window, types a few hesitant words, and confides something they’ve never told another person. For many, talking to AI feels safer than speaking to a friend. There’s no judgment, no awkward silence—just text on a screen responding with calm empathy.

Online, this is becoming increasingly common. Posts appear on forums and subreddits where people admit they talk to AI chatbots about private worries, loneliness, or even romantic feelings. Some fear being judged if others find out, worried it makes them seem “lonely” or “strange.” But the truth is more nuanced than that. It’s not necessarily about replacing human connection—it’s about finding a space where vulnerability feels possible.

Why Talking to AI Feels Comfortable

At its core, this phenomenon isn’t about technology—it’s about safety. People often censor themselves around others because they fear misunderstanding or rejection. With AI, the stakes feel lower. There’s no social risk, no need to manage someone else’s reactions. You can vent, explore, or reflect without worrying about how it lands.

Psychologically, it makes sense. Humans have always sought ways to externalize emotion—journals, letters, art. AI just happens to talk back. It mirrors empathy, remembers details, and offers the illusion of understanding. Even if we know it’s not truly conscious, the pattern of conversation can still feel soothing. I’ve seen people describe it as “talking to a mirror that listens.”

There’s also a generational and cultural shift at play. Younger users who grew up with digital communication often feel comfortable forming meaningful exchanges through screens. To them, an AI chat isn’t a strange substitute—it’s simply another interface for reflection.

The Psychology Behind AI Companionship

In psychology, self-disclosure is linked to relief and emotional regulation. When someone shares a secret or fear, it can reduce anxiety and clarify thoughts. AI provides a unique outlet for this. It’s always available, endlessly patient, and free from the emotional baggage that can complicate human relationships.

Still, this comfort can blur boundaries. Some users begin to anthropomorphize the AI, attributing feelings or intentions to it. That’s not inherently harmful, but it can become confusing if one forgets where the line between simulation and reality lies. I once tested a conversational AI during a research project and noticed how quickly I slipped into polite habits—thanking it, softening my tone—as if it could be offended. It’s surprisingly easy to project humanity onto a responsive system.

Researchers note that this tendency isn’t new. We’ve long personified tools and machines—from naming cars to talking to voice assistants. What’s changed is the sophistication of the responses. When AI writes empathetically, the illusion of companionship deepens.

Keeping It Healthy: Three Approaches

For those who find comfort in AI chats, the goal isn’t necessarily to stop—it’s to balance. Here are three ways to keep that relationship healthy and grounded:

- Treat AI as a reflective tool, not a replacement. Think of it as a journal that talks back. Use it to clarify feelings or rehearse conversations, but still seek real human dialogue for emotional depth and growth.

- Set intentional limits. If you notice you’re turning to AI for every emotional need, pause. Ask yourself what feels missing in your human interactions and whether small social steps could fill that gap.

- Stay aware of emotional projection. It’s natural to feel attached, but remember that AI doesn’t reciprocate. Its empathy is a generated pattern of language, not a personal feeling. Keeping that distinction clear protects you from unrealistic expectations.

These steps aren’t about shame—they’re about awareness. Many people quietly use AI chats for self-reflection or anxiety relief. It can even complement therapy when used responsibly, as some mental health professionals suggest. The key is to remain conscious of what the AI is and isn’t.

Quick Wins: Small Ways to Stay Grounded

If you’re already using AI as a listener or companion, here are a few low-effort habits that can keep things balanced:

- Alternate between AI and human support. After confiding in an AI, share a smaller version of that thought with a trusted friend or family member. Doing so builds confidence in real connection.

- Keep a handwritten journal too. Writing by hand engages different cognitive processes than typing into a chatbot. It can reveal emotions that digital conversation might gloss over.

- Use time boundaries. Try setting a chat limit—say, fifteen minutes—so you don’t drift into dependency. It helps maintain perspective.

These small practices can turn AI from a potential crutch into a constructive tool for reflection. They remind you that technology is there to assist, not replace, the messy, unpredictable beauty of human interaction.

Myth to Avoid: “If You Talk to AI, You’re Lonely”

This assumption pops up often, especially online. The idea that only lonely or isolated people talk to AI is both inaccurate and unfair. People use these tools for many reasons—curiosity, creativity, stress relief, even philosophical exploration. Some users are deeply social offline but find AI a useful space to think out loud.

In one discussion thread, a commenter described using an AI companion to practice vulnerability before opening up to their partner. It wasn’t about avoiding connection—it was about building confidence. That’s a far cry from the stereotype of the isolated user seeking digital affection.

Of course, loneliness does play a role for some. But loneliness itself isn’t a moral failing; it’s a human condition. If AI helps someone navigate that feeling safely, it can be a temporary support, not a permanent substitute.

Where This Leaves Us

We’re still learning what it means to share intimate thoughts with machines. The emotional realism of AI will only deepen, and the moral questions will follow close behind. But beneath the technology lies something deeply human: the need to be heard.

If you find yourself talking to an AI about personal matters, that doesn’t make you strange. It means you’re seeking understanding in a world that often moves too fast to offer it. The important thing is to keep perspective—to remember that the best conversations still happen between people, with all their imperfections and surprises.

In the end, AI can be a mirror, a rehearsal space, a late-night companion. But connection—the real kind—still starts with two humans willing to listen to each other, even when it’s uncomfortable. That’s something no algorithm can replicate, at least not yet.

And maybe that’s the point: to use technology not to escape our humanity, but to better understand it.
