Late one evening, while scrolling through r/Futurology, I realized something felt off. Posts looked polished but strangely hollow—grand headlines about “new discoveries” that didn’t link to real studies, comment sections filled with generic praise or oddly phrased rebuttals. It was all surface. That’s when I stumbled upon a thread titled “AI Slop Is Ruining Reddit for Everyone.” The phrase stuck with me. And it captured what many users now whisper about: AI slop on Reddit—the creeping flood of auto-generated content that looks human until you start reading closely.

For years, Reddit stood out as one of the last authentic spaces online—a messy but genuine conversation between strangers. Now that authenticity feels at risk. Moderators are overwhelmed. Users second-guess whether a post was written by a person or stitched together by a language model trained on their own comments.

We’re not just talking about bad posts; we’re talking about an erosion of trust at scale. Here are seven emerging realities from this strange new frontier.

1. “Slop” Isn’t Just Spam—It’s Simulation

The term “slop” started as a joke—a way to describe generic images or bland copy churned out by algorithms. But on Reddit, it signals something deeper. AI-generated posts often mimic enthusiasm perfectly: they start with emotional hooks and sprinkle in buzzwords like “breakthrough” or “revolutionary.” What they lack is texture—the small inconsistencies and lived experiences that make human writing believable.

I’ve noticed that even when these posts aren’t factually wrong, they feel wrong. They collapse nuance into a glossy sameness, leaving readers vaguely unsatisfied without knowing why. It’s not misinformation exactly—it’s disinformation’s quiet cousin: content that says nothing but takes up space.

2. Moderation Can’t Keep Up with AI Slop on Reddit

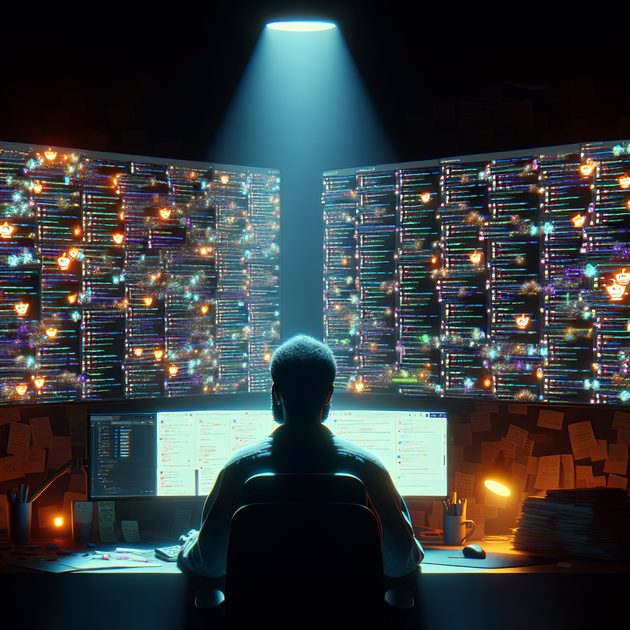

Reddit moderators have long been volunteers—the unpaid custodians of countless subcultures. In threads discussing this issue, some describe sleepless nights trying to filter hundreds of suspect submissions daily. Tools exist to flag bots or plagiarism, but detecting well-written synthetic text is another matter entirely.

One moderator described reading dozens of posts that were “technically fine” but eerily formulaic—each using similar rhythm and phrasing as if drafted by the same invisible author. When they banned accounts posting them, more appeared within hours under new names. It’s an arms race fought with unpaid labor against tireless machines.

3. The Micro-Story of a Lost Thread

A few months ago, a long-time member of r/AskHistorians shared a thoughtful answer about medieval trade routes. Within minutes, three near-identical replies appeared, each slightly reworded by an AI tool trying to mimic expertise. The original commenter deleted their answer in frustration, assuming someone had copied them for karma points. Other users jumped in to defend them, but the conversation had already collapsed under suspicion.

That moment—tiny as it seems—captures something fragile about online community life. When you can’t tell who’s real, even genuine voices start to retreat.

4. AI Slop Erodes the Reward System

Karma points once represented appreciation from peers; now they can be gamed with automation. Accounts running simple text-generation scripts can rack up thousands of upvotes by producing agreeable summaries or optimistic predictions—especially in subreddits like r/Futurology where positive speculation thrives.

This dynamic distorts what gets seen and valued. Instead of deep discussions or rare insights rising to the top, algorithmic echoes dominate. I’ve watched excellent analyses sink beneath waves of cheerful but empty posts that hit every popular keyword without saying anything new.

5. Authenticity Is Becoming a Moderation Strategy

Some communities are responding creatively rather than defensively. A few moderators now encourage “proofs of humanity”—little cues like sharing personal anecdotes or photos related to a post’s topic (without violating privacy rules). Others require sources for every claim or ban any submission lacking context beyond a single image or paragraph.

This shift reframes authenticity as something deliberate rather than assumed. Users must show traces of themselves—a perspective, memory, or question no machine could fabricate convincingly yet.

6. Reddit Reflects a Larger Cultural Drift

The rise of synthetic content isn’t unique to Reddit—it mirrors what’s happening across the web. Search results fill with AI-written blogs optimized for clicks; product reviews blur into recycled language; even art forums face floods of auto-generated images claiming originality.

But Reddit stings more because it always felt alive—a living record of thought in motion. Losing that humanity there feels symbolic, as if the collective voice we built over decades is being ghostwritten by predictive text engines trained on our own words.

There’s irony here too: some users turn to AI tools themselves just to keep up—to summarize dense threads or generate quick responses when debates move too fast. In fighting the tide, we sometimes add to it.

7. The Future May Depend on Rebuilding Trust

No single fix will stop AI slop from spreading; platforms evolve more slowly than technology does. But communities can still choose how they respond. Transparency helps: labeling suspected automated posts rather than deleting them outright invites discussion instead of paranoia.

I suspect we’ll also need new literacy skills: not just spotting fake news but sensing synthetic tone. Learning to recognize when text lacks the fingerprints of experience might become as basic a skill as spotting Photoshopped images once did.

And maybe the next version of Reddit will blend machine tools with human ethics more thoughtfully—where automation supports moderators instead of overwhelming them.

A Fragile Experiment in Humanity

The battle against AI-generated clutter might sound technical, but at heart it’s emotional—a fight for trust and meaning in digital spaces we once called home. Every authentic comment now carries extra weight simply because it’s real.

As I scrolled back through that late-night thread about “AI slop,” I noticed something hopeful tucked between complaints: people were still talking earnestly about how to fix it together. For all its chaos and clutter, that human impulse—to protect conversation itself—remains stubbornly alive.

If Reddit can hold onto that impulse amid the noise, maybe authenticity isn’t doomed after all; it’s just learning how to survive in a world where even sincerity can be simulated.
