The AI Slop Drops Right from the Top: What a White House Deepfake Means for Us All

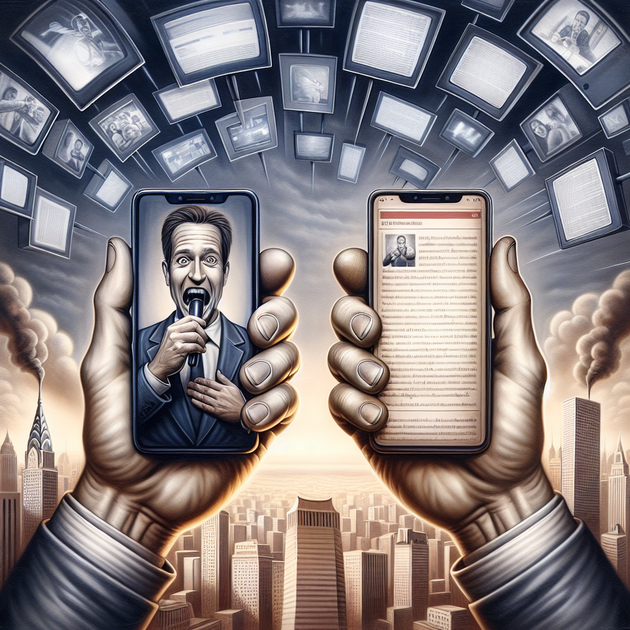

Is artificial intelligence making it harder to tell what's real, especially when even top officials get involved? That's the question many are asking after the latest controversy over "AI slop," sparked by reports that the White House itself posted a vulgar deepfake targeting political opponents.

What Happened with the White House Deepfake?

The term "AI slop" is getting thrown around more these days for a reason. On Reddit and across social media, people were shocked by claims that the official White House account had shared a digitally manipulated video, or deepfake, that used vulgarity to mock its opposition. While the full context is still emerging (and the sourcing is still being debated), the story took off because it touched two big anxieties at once: government credibility and artificial intelligence gone wrong.

Deepfakes aren't new; they've been circulating online for years. What made this case so alarming is that one allegedly came from such a high-profile source. What once felt like a fringe internet problem is suddenly at the center of national politics.

Why Is ‘AI Slop’ Such a Big Deal Now?

When people say "AI slop," they mean content generated by artificial intelligence that's low-quality or outright misleading. In this case, it wasn't just any meme or video; it looked official and carried the weight of authority.

Let’s break down why everyone’s talking about this:

- Trust Issues: If even governments share questionable content, who can we trust online?

- Speed of Spread: Deepfakes can go viral faster than fact-checkers can catch up.

- Impact on Elections: Manipulated videos could sway opinions or even votes.

- Difficulty Spotting Fakes: Technology keeps improving; our eyes can’t always tell what’s real anymore.

- Accountability Gaps: It’s often unclear who made or shared these fakes first.

The alleged White House deepfake isn't just about one video; it's about how quickly our information landscape is changing.

The Growing Problem of Political Deepfakes

Political deepfakes have already caused problems around the world. In recent elections from Europe to Asia to the United States, fake videos have surfaced showing politicians saying things they never said. Sometimes these are obvious jokes or parodies, but sometimes they're convincing enough to fool large numbers of viewers.

A friend recently shared an example from their own family group chat. Someone posted a "news clip" showing a well-known senator making outrageous claims. It turned out to be entirely fake, a product of generative AI, and no one caught it until it was fact-checked hours later. Now imagine that same confusion on a massive scale, with an official government account involved.

The Ethics and Dangers of Official-Looking Deepfakes

It’s easy to shrug off most internet memes as harmless fun. But when public figures or institutions like the White House are accused of sharing deepfakes—even if unintentionally—the stakes get much higher.

Here’s what experts worry about:

- Erosion of Trust: If we can’t believe official communications, democracy itself takes a hit.

- Polarization: Fake videos can inflame divisions between groups or parties.

- Misinformation Floods: The sheer volume of “AI slop” makes it hard for real news to stand out.

People across the political spectrum are now calling for clearer guidelines on using AI-generated media, especially in politics.

Moving Forward: How Can We Spot ‘AI Slop’ and Stay Informed?

The good news? There are ways to protect yourself from falling for fake content:

- Check where a video came from before sharing it. A verification badge tells you who posted something, not whether the content itself is authentic.

- If something seems too outrageous or perfectly timed to stir up anger, dig deeper before believing it.

- Use reverse image/video search tools when you suspect something might be fake.

- Stay updated on new tech that helps spot manipulated media automatically.

It comes down to skepticism—not cynicism—and making sure we don’t let “AI slop” cloud our judgment.

An Anecdote on Digital Doubt

Remember the last time a celebrity trended for saying something totally out of character, only for the clip to turn out to be digitally faked? Multiply that feeling by millions when it happens with political leaders or trusted institutions. That's why this story strikes such a nerve: it shows how fragile our sense of reality has become in the age of advanced AI.

A Tipping Point for Digital Trust?

Whether or not you follow politics closely, episodes like this force all of us to rethink what we trust online—from silly memes all the way up to official statements. As “AI slop” drops right from the top levels of power, will society demand better rules—or just adapt by becoming even more skeptical?

How do you think we should balance technology’s benefits with its risks in shaping public opinion?
